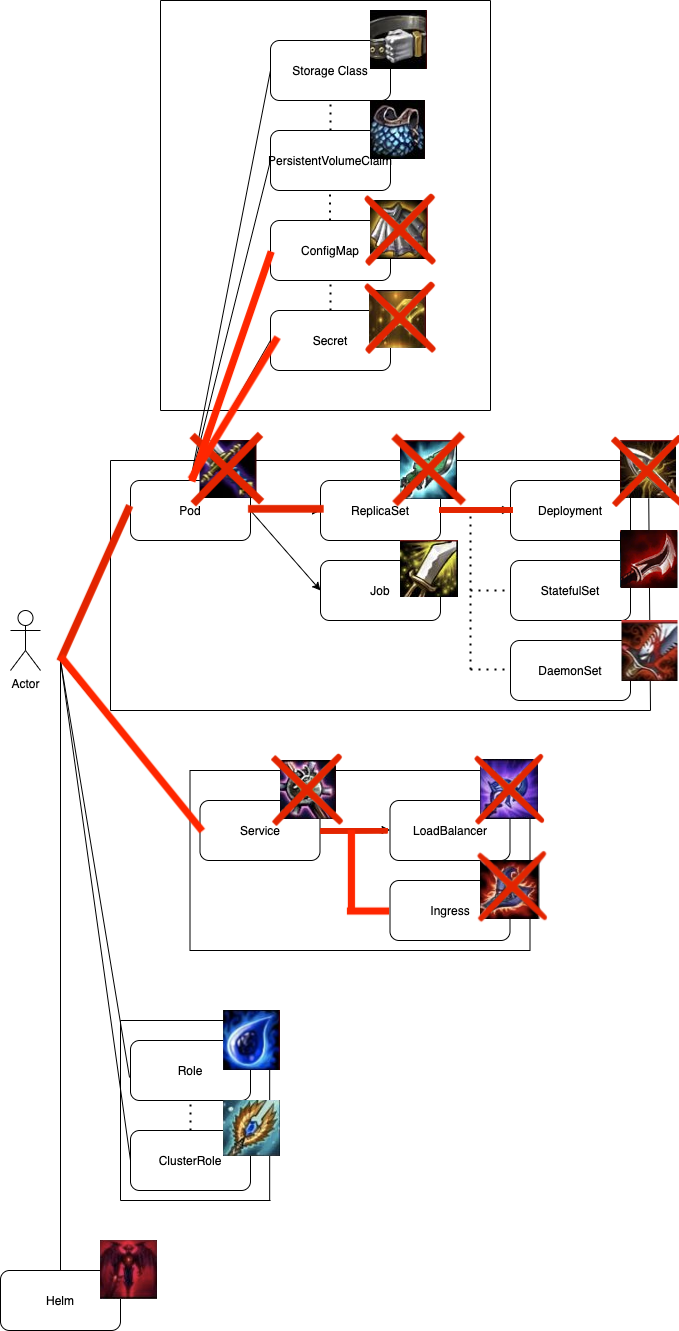

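Having covered the story of the three generations (Pod, ReplicaSet, and Deployment) and how to use them, next I'll show how to place Pods on specific nodes according to a strategy. This lets us pick a different deployment strategy for each situation.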

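As the names suggest, Affinity and Anti-Affinity mean affinity scheduling and anti-affinity scheduling. When certain conditions are met, the Pod is scheduled onto particular nodes. For anti-affinity, it is kept off those nodes.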

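nodeSelector is the simplest of the node scheduling and eviction strategies. By giving a Node a specific label, we can make a Pod run only on the nodes that carry that label.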
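To make the matching rule concrete, here is a minimal Python sketch of how a nodeSelector is checked. It is my own illustration, not kube-scheduler code. A node qualifies only if it carries every key/value pair in the selector. The node names come from the cluster used below, and only the labels that matter here are modelled. The helpers matches_node_selector and eligible_nodes are hypothetical names.

```python
from typing import Dict, List

Labels = Dict[str, str]

# Node labels after the `kubectl label ... env=stg` step below (all other labels omitted).
NODES: Dict[str, Labels] = {
    "gke-my-first-cluster-1-default-pool-dddd2fae-j0k1": {},
    "gke-my-first-cluster-1-default-pool-dddd2fae-rfl8": {},
    "gke-my-first-cluster-1-default-pool-dddd2fae-tz38": {"env": "stg"},
}


def matches_node_selector(node_labels: Labels, node_selector: Labels) -> bool:
    """True if the node has every selector key with exactly the selector's value."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def eligible_nodes(nodes: Dict[str, Labels], node_selector: Labels) -> List[str]:
    """Names of the nodes a Pod with this nodeSelector may be scheduled on."""
    return sorted(
        name for name, labels in nodes.items() if matches_node_selector(labels, node_selector)
    )


if __name__ == "__main__":
    print(eligible_nodes(NODES, {"env": "stg"}))
    # ['gke-my-first-cluster-1-default-pool-dddd2fae-tz38']
```

An empty selector matches every node. A selector that no node satisfies leaves the Pod with nowhere to go, so it stays Pending.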

First, as before, we prepare a clean three-node cluster. This is the cluster from the earlier exercises, with their leftover resources removed.

```
$ kubectl get pod
No resources found in default namespace.

$ kubectl get node
NAME                                                STATUS   ROLES    AGE   VERSION
gke-my-first-cluster-1-default-pool-dddd2fae-j0k1   Ready    <none>   11d   v1.18.6-gke.3504
gke-my-first-cluster-1-default-pool-dddd2fae-rfl8   Ready    <none>   11d   v1.18.6-gke.3504
gke-my-first-cluster-1-default-pool-dddd2fae-tz38   Ready    <none>   11d   v1.18.6-gke.3504
```

Add the label env=stg to one of the nodes. The general syntax is:

```
kubectl label nodes <node-name> <label-key>=<label-value>
```

```
$ kubectl label nodes gke-my-first-cluster-1-default-pool-dddd2fae-tz38 env=stg
node/gke-my-first-cluster-1-default-pool-dddd2fae-tz38 labeled
```

Next, add nodeSelector to the Pod template in the Deployment. Here we use the Deployment from the repository as an example:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ironman
  labels:
    name: ironman
    app: ironman
spec:
  minReadySeconds: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: ironman
  replicas: 1
  template:
    metadata:
      labels:
        app: ironman
    spec:
      nodeSelector:
        env: stg
      containers:
        - name: ironman
          image: ghjjhg567/ironman:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8100
          resources:
            limits:
              cpu: "1"
              memory: "2Gi"
            requests:
              cpu: 500m
              memory: 256Mi
          envFrom:
            - secretRef:
                name: ironman-config
          command: ["./docker-entrypoint.sh"]
        - name: redis
          image: redis:4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 6379
        - name: nginx
          image: nginx
          imagePullPolicy: Always
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: /etc/nginx/nginx.conf
              name: nginx-conf-volume
              subPath: nginx.conf
              readOnly: true
            - mountPath: /etc/nginx/conf.d/default.conf
              subPath: default.conf
              name: nginx-route-volume
              readOnly: true
          readinessProbe:
            httpGet:
              path: /v1/hc
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
      volumes:
        - name: nginx-conf-volume
          configMap:
            name: nginx-config
        - name: nginx-route-volume
          configMap:
            name: nginx-route-volume
```

Next, we deploy the Deployment with nodeSelector to the cluster and watch the Pod come up:

```
$ kubectl get pod --watch
NAME                      READY   STATUS    RESTARTS   AGE
ironman-7c7d8dd78-b2zp5   3/3     Running   0          24s
```

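Finally, let's examine the Pod created with nodeSelector. The output below shows that the replica runs only on tz38, the node we labeled, which confirms that nodeSelector works. The Deployment sets replicas: 1. The 3/3 in the READY column counts the Pod's three containers (ironman, redis, nginx), not replicas.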

```
$ kubectl get pod
NAME                      READY   STATUS              RESTARTS   AGE
ironman-7c7d8dd78-b2zp5   0/3     ContainerCreating   0          5s

$ kubectl describe pod ironman-7c7d8dd78-b2zp5
Name:         ironman-7c7d8dd78-b2zp5
Namespace:    default
Priority:     0
Node:         gke-my-first-cluster-1-default-pool-dddd2fae-tz38/10.140.0.3
Start Time:   Mon, 05 Oct 2020 17:50:32 +0800
Labels:       app=ironman
```

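Next up is NodeAffinity, which can be thought of as an advanced version of nodeSelector. Besides the selector functionality above, NodeAffinity offers two policies: requiredDuringSchedulingIgnoredDuringExecution and preferredDuringSchedulingIgnoredDuringExecution. These are hard affinity and soft affinity. A hard rule must be satisfied for the Pod to be scheduled at all. A soft rule only makes matching nodes more attractive. The IgnoredDuringExecution part means that a running Pod is not evicted if its node's labels change later.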

We replace nodeSelector in the YAML above with nodeAffinity:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ironman
  labels:
    name: ironman
    app: ironman
spec:
  minReadySeconds: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: ironman
  replicas: 1
  template:
    metadata:
      labels:
        app: ironman
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: env
                    operator: In
                    values:
                      - test
                      - uat
      containers:
        - name: ironman
          image: ghjjhg567/ironman:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8100
          resources:
            limits:
              cpu: "1"
              memory: "2Gi"
            requests:
              cpu: 500m
              memory: 256Mi
          envFrom:
            - secretRef:
                name: ironman-config
          command: ["./docker-entrypoint.sh"]
        - name: redis
          image: redis:4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 6379
        - name: nginx
          image: nginx
          imagePullPolicy: Always
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: /etc/nginx/nginx.conf
              name: nginx-conf-volume
              subPath: nginx.conf
              readOnly: true
            - mountPath: /etc/nginx/conf.d/default.conf
              subPath: default.conf
              name: nginx-route-volume
              readOnly: true
          readinessProbe:
            httpGet:
              path: /v1/hc
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
      volumes:
        - name: nginx-conf-volume
          configMap:
            name: nginx-config
        - name: nginx-route-volume
          configMap:
            name: nginx-route-volume
```

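Here we can see that the Pod with the new hard affinity fails to start. The label we added earlier is env=stg, but the hard-affinity rule requires env=test or env=uat. No node qualifies, so the Pod stays Pending.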

```
$ kubectl apply -f deployment.yaml
$ kubectl get deployment
NAME      READY   UP-TO-DATE   AVAILABLE   AGE
ironman   0/1     1            0           17s

$ kubectl get pod --watch
NAME                       READY   STATUS        RESTARTS   AGE
ironman-6d655d444d-gffx6   2/3     Terminating   0          9m32s
ironman-db66975d8-rtvgg    0/3     Pending       0          21s
ironman-6d655d444d-gffx6   0/3     Terminating   0          9m36s
ironman-6d655d444d-gffx6   0/3     Terminating   0          9m48s
ironman-6d655d444d-gffx6   0/3     Terminating   0          9m48s
ironman-db66975d8-rtvgg    0/3     Pending       0          37s
```
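The Pending result comes from how a required rule is evaluated. Below is a simplified Python model of nodeSelectorTerms. It is my own sketch, not the scheduler's code. The terms are ORed, the matchExpressions inside one term are ANDed, and an empty term matches nothing. It covers the operators In, NotIn, Exists, DoesNotExist, Gt and Lt but ignores matchFields. The function names are hypothetical.

```python
from typing import Dict, List, Optional

Labels = Dict[str, str]
Expression = dict  # mirrors one matchExpressions entry: {"key", "operator", "values"}


def match_expression(labels: Labels, key: str, operator: str,
                     values: Optional[List[str]] = None) -> bool:
    """Evaluate a single matchExpressions entry against a node's labels."""
    values = values or []
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        # NotIn also matches nodes that do not carry the key at all.
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    if operator in ("Gt", "Lt"):
        # Gt/Lt compare integers; a missing or non-integer label never matches.
        if key not in labels or len(values) != 1:
            return False
        try:
            actual, bound = int(labels[key]), int(values[0])
        except ValueError:
            return False
        return actual > bound if operator == "Gt" else actual < bound
    raise ValueError(f"unsupported operator: {operator}")


def matches_term(labels: Labels, match_expressions: List[Expression]) -> bool:
    """Every expression in one nodeSelectorTerm must hold; an empty term matches nothing."""
    if not match_expressions:
        return False
    return all(match_expression(labels, e["key"], e["operator"], e.get("values"))
               for e in match_expressions)


def matches_required(labels: Labels, node_selector_terms: List[List[Expression]]) -> bool:
    """The node qualifies if at least one nodeSelectorTerm matches (terms are ORed)."""
    return any(matches_term(labels, term) for term in node_selector_terms)


# The hard rule from the YAML above: env In [test, uat].
REQUIRED = [[{"key": "env", "operator": "In", "values": ["test", "uat"]}]]

if __name__ == "__main__":
    # tz38 only has env=stg, so it fails; no node qualifies and the Pod stays Pending.
    print(matches_required({"env": "stg"}, REQUIRED))  # False
```

If uat is replaced with stg, as the next step does, the same check returns True for tz38.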

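Now let's change the hard-affinity values to test and stg, replacing uat, and add a soft affinity as well.

Tip: the weight here matters a lot! It accepts values from 1 to 100.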

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ironman
  labels:
    name: ironman
    app: ironman
spec:
  minReadySeconds: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: ironman
  replicas: 1
  template:
    metadata:
      labels:
        app: ironman
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: env
                    operator: In
                    values:
                      - test
                      - stg
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 1
              preference:
                matchExpressions:
                  - key: priority
                    operator: In
                    values:
                      - first
      containers:
        - name: ironman
          image: ghjjhg567/ironman:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8100
          resources:
            limits:
              cpu: "1"
              memory: "2Gi"
            requests:
              cpu: 500m
              memory: 256Mi
          envFrom:
            - secretRef:
                name: ironman-config
          command: ["./docker-entrypoint.sh"]
        - name: redis
          image: redis:4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 6379
        - name: nginx
          image: nginx
          imagePullPolicy: Always
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: /etc/nginx/nginx.conf
              name: nginx-conf-volume
              subPath: nginx.conf
              readOnly: true
            - mountPath: /etc/nginx/conf.d/default.conf
              subPath: default.conf
              name: nginx-route-volume
              readOnly: true
          readinessProbe:
            httpGet:
              path: /v1/hc
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
      volumes:
        - name: nginx-conf-volume
          configMap:
            name: nginx-config
        - name: nginx-route-volume
          configMap:
            name: nginx-route-volume
```

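After applying it, we can see that the hard affinity is satisfied. The Pod is created even though the soft affinity is not, since no node has the priority=first label yet.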

```
$ kubectl apply -f deployment.yaml
deployment.apps/ironman created

$ kubectl get pod --watch
NAME                       READY   STATUS              RESTARTS   AGE
ironman-5bb87585db-pqp8b   0/3     ContainerCreating   0          3s
ironman-5bb87585db-pqp8b   2/3     Running             0          8s
ironman-5bb87585db-pqp8b   2/3     Running             0          14s
ironman-5bb87585db-pqp8b   3/3     Running             0          18s
ironman-5bb87585db-pqp8b   3/3     Running             0          18s
```

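What if another node satisfies both the hard affinity and the soft affinity? Will it be preferred over a node that satisfies only the hard affinity? To find out, we give rfl8 both env=stg and priority=first and deploy again: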

```
$ kubectl label nodes gke-my-first-cluster-1-default-pool-dddd2fae-rfl8 env=stg
node/gke-my-first-cluster-1-default-pool-dddd2fae-rfl8 labeled

$ kubectl label nodes gke-my-first-cluster-1-default-pool-dddd2fae-rfl8 priority=first
node/gke-my-first-cluster-1-default-pool-dddd2fae-rfl8 labeled

$ kubectl apply -f deployment.yaml
deployment.apps/ironman created
```

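Let's look at the result! When several nodes satisfy the hard affinity, the Kubernetes scheduler uses the weights of the matched soft-affinity terms to decide where the Pod lands. Here rfl8, which also carries priority=first, was chosen over tz38. Strictly speaking, the weight is added to the node's score alongside other scheduling factors. It is therefore a strong preference rather than a guarantee.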

```
$ kubectl get pod
NAME                      READY   STATUS    RESTARTS   AGE
ironman-9c45876f7-ztskh   3/3     Running   0          39s

$ kubectl describe pod ironman-9c45876f7-ztskh
Name:         ironman-9c45876f7-ztskh
Namespace:    default
Priority:     0
Node:         gke-my-first-cluster-1-default-pool-dddd2fae-rfl8/10.140.0.2
Start Time:   Mon, 05 Oct 2020 18:16:40 +0800
Labels:       app=ironman
              pod-template-hash=9c45876f7
Annotations:  <none>
Status:       Running
IP:           10.0.0.25
IPs:
```
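The same filter-then-score idea can be sketched in Python. This is a simplified model with stated assumptions, not the real scheduler:

- It only supports the In operator.
- It sums the weights of the preferred terms each feasible node matches.
- It ignores score normalization and the other scoring plugins that kube-scheduler combines with NodeAffinity.

The node labels are the ones set in this walkthrough, with unrelated labels omitted. The helper names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Labels = Dict[str, str]

J0K1 = "gke-my-first-cluster-1-default-pool-dddd2fae-j0k1"
RFL8 = "gke-my-first-cluster-1-default-pool-dddd2fae-rfl8"
TZ38 = "gke-my-first-cluster-1-default-pool-dddd2fae-tz38"

# Node labels after both rounds of `kubectl label` (unrelated labels omitted).
NODES: Dict[str, Labels] = {
    J0K1: {},
    RFL8: {"env": "stg", "priority": "first"},
    TZ38: {"env": "stg"},
}

# Hard rule: env In [test, stg]; all (key, values) pairs must hold.
REQUIRED: List[Tuple[str, List[str]]] = [("env", ["test", "stg"])]
# Soft rules as (weight, key, values): priority In [first] with weight 1.
PREFERRED: List[Tuple[int, str, List[str]]] = [(1, "priority", ["first"])]


def label_in(labels: Labels, key: str, values: List[str]) -> bool:
    return key in labels and labels[key] in values


def node_affinity_scores(nodes: Dict[str, Labels],
                         required: List[Tuple[str, List[str]]],
                         preferred: List[Tuple[int, str, List[str]]]) -> Dict[str, int]:
    """Drop nodes failing the hard rule, then score the rest by matched soft weights."""
    scores: Dict[str, int] = {}
    for name, labels in nodes.items():
        if not all(label_in(labels, key, values) for key, values in required):
            continue  # fails the hard rule: never a candidate
        scores[name] = sum(w for w, key, values in preferred if label_in(labels, key, values))
    return scores


def pick_node(scores: Dict[str, int]) -> Optional[str]:
    """Highest score wins; None means no feasible node, i.e. the Pod stays Pending."""
    if not scores:
        return None
    # Ties are broken by name only to keep this sketch deterministic;
    # kube-scheduler does not break ties alphabetically.
    return max(sorted(scores), key=lambda name: scores[name])


if __name__ == "__main__":
    scores = node_affinity_scores(NODES, REQUIRED, PREFERRED)
    print(scores)
    print(pick_node(scores))
```

If rfl8 were removed, tz38 would still be picked with a score of 0. That is the earlier observation that an unmet soft affinity does not block scheduling.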

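PodAffinity is similar to NodeAffinity, but it works at the Pod level. Its rules match the labels of Pods that are already running. Node labels are only used, through topologyKey, to decide what counts as the same location. Pods also have PodAntiAffinity, the anti-affinity counterpart.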

For the example, we can use a label that both of the nodes below carry:

```
$ kubectl get node gke-my-first-cluster-1-default-pool-dddd2fae-j0k1 --show-labels
NAME                                                STATUS   ROLES    AGE   VERSION            LABELS
gke-my-first-cluster-1-default-pool-dddd2fae-j0k1   Ready    <none>   11d   v1.18.6-gke.3504   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/instance-type=g1-small,beta.kubernetes.io/os=linux,cloud.google.com/gke-nodepool=default-pool,cloud.google.com/gke-os-distribution=cos,cloud.google.com/machine-family=g1,failure-domain.beta.kubernetes.io/region=asia-east1,failure-domain.beta.kubernetes.io/zone=asia-east1-a,kubernetes.io/arch=amd64,kubernetes.io/hostname=gke-my-first-cluster-1-default-pool-dddd2fae-j0k1,kubernetes.io/os=linux,node.kubernetes.io/instance-type=g1-small,topology.kubernetes.io/region=asia-east1,topology.kubernetes.io/zone=asia-east1-a

$ kubectl get nodes gke-my-first-cluster-1-default-pool-dddd2fae-rfl8 --show-labels
NAME                                                STATUS   ROLES    AGE   VERSION            LABELS
gke-my-first-cluster-1-default-pool-dddd2fae-rfl8   Ready    <none>   11d   v1.18.6-gke.3504   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/instance-type=g1-small,beta.kubernetes.io/os=linux,cloud.google.com/gke-nodepool=default-pool,cloud.google.com/gke-os-distribution=cos,cloud.google.com/machine-family=g1,env=stg,failure-domain.beta.kubernetes.io/region=asia-east1,failure-domain.beta.kubernetes.io/zone=asia-east1-a,kubernetes.io/arch=amd64,kubernetes.io/hostname=gke-my-first-cluster-1-default-pool-dddd2fae-rfl8,kubernetes.io/os=linux,node.kubernetes.io/instance-type=g1-small,priority=first,topology.kubernetes.io/region=asia-east1,topology.kubernetes.io/zone=asia-east1-a
```

Both nodes carry the label kubernetes.io/hostname. Its values are gke-my-first-cluster-1-default-pool-dddd2fae-j0k1 and gke-my-first-cluster-1-default-pool-dddd2fae-rfl8 respectively. Suppose there are three Pods a, b, and c. If a and c run on kubernetes.io/hostname=gke-my-first-cluster-1-default-pool-dddd2fae-j0k1 and b runs on kubernetes.io/hostname=gke-my-first-cluster-1-default-pool-dddd2fae-rfl8, then b is in a different topology from a and c. With topologyKey: kubernetes.io/hostname, each node is its own topology domain.
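To see how topologyKey turns node labels into topology domains, here is a small Python sketch. It uses the labels of the two nodes above, trimmed to the relevant keys, and the hypothetical Pods a, b and c. Grouping by kubernetes.io/hostname separates b from a and c. Grouping by topology.kubernetes.io/zone puts all three in one domain, because both nodes are in asia-east1-a. The function topology_domains is a hypothetical helper, not a Kubernetes API.

```python
from collections import defaultdict
from typing import Dict, List

J0K1 = "gke-my-first-cluster-1-default-pool-dddd2fae-j0k1"
RFL8 = "gke-my-first-cluster-1-default-pool-dddd2fae-rfl8"

# A subset of the labels printed by `kubectl get node --show-labels` above.
NODE_LABELS: Dict[str, Dict[str, str]] = {
    J0K1: {"kubernetes.io/hostname": J0K1, "topology.kubernetes.io/zone": "asia-east1-a"},
    RFL8: {"kubernetes.io/hostname": RFL8, "topology.kubernetes.io/zone": "asia-east1-a"},
}

# Hypothetical placement of the Pods a, b and c from the example.
POD_TO_NODE: Dict[str, str] = {"a": J0K1, "b": RFL8, "c": J0K1}


def topology_domains(pod_to_node: Dict[str, str],
                     node_labels: Dict[str, Dict[str, str]],
                     topology_key: str) -> Dict[str, List[str]]:
    """Group Pods by the value of topology_key on the node each Pod runs on."""
    domains: Dict[str, List[str]] = defaultdict(list)
    for pod, node in sorted(pod_to_node.items()):
        value = node_labels[node].get(topology_key)
        if value is not None:  # nodes without the key are skipped in this sketch
            domains[value].append(pod)
    return dict(domains)


if __name__ == "__main__":
    print(topology_domains(POD_TO_NODE, NODE_LABELS, "kubernetes.io/hostname"))
    print(topology_domains(POD_TO_NODE, NODE_LABELS, "topology.kubernetes.io/zone"))
```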

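As usual, let's start with the YAML. Here we have a Deployment called ironman-1. Its Pod must be co-located with Pods labeled ironman: one or ironman: three (required affinity). It would also prefer to stay away from Pods labeled ironman: two (preferred anti-affinity). ironman-1's required affinity also matches its own label. When no running Pod matches yet, the scheduler still lets a Pod that matches its own required affinity term be scheduled, so the first Pod does not get stuck in Pending. We then add two more YAML files, ironman-2 and ironman-3, for testing.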

ironman-1.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ironman-1
  labels:
    name: ironman
    app: ironman
spec:
  minReadySeconds: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: ironman
  replicas: 1
  template:
    metadata:
      labels:
        app: ironman
        ironman: one
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: ironman
                    operator: In
                    values:
                      - one
                      - three
              topologyKey: kubernetes.io/hostname
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: ironman
                      operator: In
                      values:
                        - two
                topologyKey: kubernetes.io/hostname
      containers:
        - name: ironman
          image: ghjjhg567/ironman:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8100
          envFrom:
            - secretRef:
                name: ironman-config
          command: ["./docker-entrypoint.sh"]
        - name: redis
          image: redis:4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 6379
        - name: nginx
          image: nginx
          imagePullPolicy: Always
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: /etc/nginx/nginx.conf
              name: nginx-conf-volume
              subPath: nginx.conf
              readOnly: true
            - mountPath: /etc/nginx/conf.d/default.conf
              subPath: default.conf
              name: nginx-route-volume
              readOnly: true
          readinessProbe:
            httpGet:
              path: /v1/hc
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
      volumes:
        - name: nginx-conf-volume
          configMap:
            name: nginx-config
        - name: nginx-route-volume
          configMap:
            name: nginx-route-volume
```

ironman-2.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ironman-2
  labels:
    name: ironman
    app: ironman
spec:
  minReadySeconds: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: ironman
  replicas: 1
  template:
    metadata:
      labels:
        app: ironman
        ironman: two
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: ironman
                    operator: In
                    values:
                      - two
              topologyKey: kubernetes.io/hostname
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: ironman
                      operator: In
                      values:
                        - one
                        - three
                topologyKey: kubernetes.io/hostname
      containers:
        - name: ironman
          image: ghjjhg567/ironman:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8100
          envFrom:
            - secretRef:
                name: ironman-config
          command: ["./docker-entrypoint.sh"]
        - name: redis
          image: redis:4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 6379
        - name: nginx
          image: nginx
          imagePullPolicy: Always
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: /etc/nginx/nginx.conf
              name: nginx-conf-volume
              subPath: nginx.conf
              readOnly: true
            - mountPath: /etc/nginx/conf.d/default.conf
              subPath: default.conf
              name: nginx-route-volume
              readOnly: true
          readinessProbe:
            httpGet:
              path: /v1/hc
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
      volumes:
        - name: nginx-conf-volume
          configMap:
            name: nginx-config
        - name: nginx-route-volume
          configMap:
            name: nginx-route-volume
```

ironman-3.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ironman-3
  labels:
    name: ironman
    app: ironman
spec:
  minReadySeconds: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: ironman
  replicas: 1
  template:
    metadata:
      labels:
        app: ironman
        ironman: three
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: ironman
                    operator: In
                    values:
                      - one
                      - three
              topologyKey: kubernetes.io/hostname
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: ironman
                      operator: In
                      values:
                        - two
                topologyKey: kubernetes.io/hostname
      containers:
        - name: ironman
          image: ghjjhg567/ironman:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8100
          envFrom:
            - secretRef:
                name: ironman-config
          command: ["./docker-entrypoint.sh"]
        - name: redis
          image: redis:4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 6379
        - name: nginx
          image: nginx
          imagePullPolicy: Always
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: /etc/nginx/nginx.conf
              name: nginx-conf-volume
              subPath: nginx.conf
              readOnly: true
            - mountPath: /etc/nginx/conf.d/default.conf
              subPath: default.conf
              name: nginx-route-volume
              readOnly: true
          readinessProbe:
            httpGet:
              path: /v1/hc
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
      volumes:
        - name: nginx-conf-volume
          configMap:
            name: nginx-config
        - name: nginx-route-volume
          configMap:
            name: nginx-route-volume
```

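Then deploy each of them. All three Deployments share the selector app: ironman. The Kubernetes documentation advises against overlapping selectors between controllers, so outside a quick experiment like this you would also add the ironman label to each selector.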

```
$ kubectl apply -f ironman-1.yaml
deployment.apps/ironman-1 created

$ kubectl apply -f ironman-2.yaml
deployment.apps/ironman-2 created

$ kubectl apply -f ironman-3.yaml
deployment.apps/ironman-3 created
```

Ideally, ironman-1 and ironman-3 end up on the same node, and ironman-2 sits alone on a different node. Let's verify:

```
$ kubectl get pod
NAME                         READY   STATUS    RESTARTS   AGE
ironman-1-59cc5784ff-l9lh6   3/3     Running   0          41s
ironman-2-67647c9b7c-5n6f4   3/3     Running   0          34s
ironman-3-5797656cf5-662td   3/3     Running   0          29s

$ kubectl describe pod ironman-1-59cc5784ff-l9lh6
Name:         ironman-1-59cc5784ff-l9lh6
Namespace:    default
Priority:     0
Node:         gke-my-first-cluster-1-default-pool-dddd2fae-j0k1/10.140.0.4
Start Time:   Mon, 05 Oct 2020 19:20:14 +0800

$ kubectl describe pod ironman-2-67647c9b7c-5n6f4
Name:         ironman-2-67647c9b7c-5n6f4
Namespace:    default
Priority:     0
Node:         gke-my-first-cluster-1-default-pool-dddd2fae-tz38/10.140.0.3

$ kubectl describe pod ironman-3-5797656cf5-662td
Name:         ironman-3-5797656cf5-662td
Namespace:    default
Priority:     0
Node:         gke-my-first-cluster-1-default-pool-dddd2fae-j0k1/10.140.0.4
```

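The result matches our expectations. ironman-1 and ironman-3 both run on j0k1, while ironman-2 runs on tz38. This confirms that affinity and anti-affinity work as intended.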

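However, there will be situations where podAffinity and podAntiAffinity cannot both be satisfied. Here the affinity is hard and the anti-affinity is soft, so the required rule acts as a filter: only topologies that satisfy the affinity are candidates. This is where weight comes in. Among those candidates, the scheduler uses the anti-affinity weight to score each topology. It deploys to the one with the best score, i.e. the one that violates the anti-affinity preference the least.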
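The whole experiment can be replayed with a simplified Python model. It is a sketch, not the InterPodAffinity plugin itself, and it makes these assumptions:

- topologyKey is kubernetes.io/hostname, so each node is its own domain.
- Pods are scheduled one at a time, in the order they were applied.
- The required podAffinity acts as a filter. If no running Pod matches yet but the Pod matches its own term, every node is allowed.
- Each running Pod matched by the preferred podAntiAffinity costs its node weight points.

It ignores other scoring plugins, score normalization, and rules declared on already-running Pods. It also breaks ties by node order, so ironman-2 lands on rfl8 here rather than on tz38 as in the real run. The names schedule and replay are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

NODES = [
    "gke-my-first-cluster-1-default-pool-dddd2fae-j0k1",
    "gke-my-first-cluster-1-default-pool-dddd2fae-rfl8",
    "gke-my-first-cluster-1-default-pool-dddd2fae-tz38",
]

# (deployment, its `ironman` label, required podAffinity values,
#  preferred podAntiAffinity values, anti-affinity weight), as in ironman-1/2/3.yaml
PODS = [
    ("ironman-1", "one", ["one", "three"], ["two"], 100),
    ("ironman-2", "two", ["two"], ["one", "three"], 100),
    ("ironman-3", "three", ["one", "three"], ["two"], 100),
]

Placed = List[Tuple[str, str]]  # (ironman label of a running Pod, node it runs on)


def schedule(own: str, required: List[str], anti: List[str], weight: int,
             placed: Placed) -> Optional[str]:
    """Return the chosen node, or None if the Pod would stay Pending."""
    affinity_nodes = {node for label, node in placed if label in required}
    if affinity_nodes:
        feasible = [n for n in NODES if n in affinity_nodes]
    elif own in required:
        # No running Pod matches yet, but the Pod matches its own term: allow any node.
        feasible = list(NODES)
    else:
        return None

    def score(node: str) -> int:
        # Lose `weight` for every running Pod on this node matched by the anti-affinity term.
        return -weight * sum(1 for label, n in placed if n == node and label in anti)

    return max(feasible, key=score)  # the first node in NODES order wins ties


def replay() -> Dict[str, Optional[str]]:
    placed: Placed = []
    result: Dict[str, Optional[str]] = {}
    for name, own, required, anti, weight in PODS:
        node = schedule(own, required, anti, weight, placed)
        result[name] = node
        if node is not None:
            placed.append((own, node))
    return result


if __name__ == "__main__":
    for name, node in replay().items():
        print(name, "->", node)
    # Hypothetical Pod "four": hard affinity to "one" plus a soft anti-affinity to "one".
    # The hard rule wins; the anti-affinity only lowers the score of the only candidate.
    print("four ->", schedule("four", ["one"], ["one"], 100, [("one", NODES[0])]))
```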

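I originally planned to cover Taints and Tolerations in this chapter too, but the Affinity section turned out rather long. Taints get their own chapter next time Orz. If you're interested, please see Day-24.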